Clinical Problem

Each year, more than one million men worldwide receive a prostate cancer diagnosis, making it one of the most common cancers among men. Prostate cancer is generally diagnosed through a combination of radiological and histopathological images, such as MRI and high-resolution microscopy images of prostate specimens. In recent years, several deep learning models have emerged for automated prostate cancer diagnosis on either of these modalities. However, these models are generally unimodal: they accept only one modality as input and do not incorporate any information from the other. This is in stark contrast with the usual hospital workflow, where multidisciplinary boards pool expertise from different specialties to settle on a diagnosis. The development of multimodal models, which incorporate data from multiple modalities and thereby combine expert information from different specialties, is a relatively underexplored area of research that is expected to improve performance on tasks such as prostate cancer diagnosis and prognosis.

Solutions

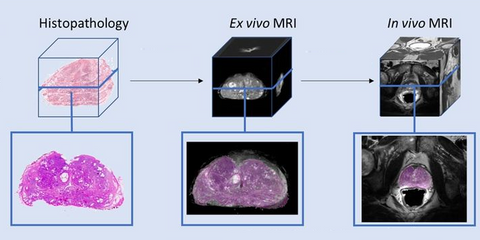

This master's project will be embedded in the multimodal AI research group at Radboudumc, where several PhD candidates work on developing multimodal AI models. The project will focus on one of the most crucial parts of the multimodal development pipeline: the registration of the different modalities, that is, spatially aligning images of the same prostate acquired with different modalities. This registration step is essential for the eventual multimodal model to learn efficiently from the joint representation of the modalities. To facilitate the development of this multimodal registration method, we have gathered a rich cohort of 80 prostate cancer patients with their corresponding in vivo MRI, ex vivo MRI, and digitized histopathology images. The primary objective of the project is therefore to develop and validate a multimodal registration pipeline for prostate cancer. Within this project, you will have the opportunity to work with cutting-edge deep learning methods to solve some of the technical challenges of multimodal registration. One possible research direction is the exploration of image super-resolution to bridge the gap between the different image resolutions of the modalities.

Tasks

- Develop and validate deep learning methods for multimodal registration in prostate cancer.

Requirements

- Master's students majoring in computer science, mathematics, biomedical engineering, artificial intelligence, physics, or a related area who are in the final stage of their studies are invited to apply.

- Experience with programming in Python.

- Experience with or interest in deep learning, medical imaging, and medical image analysis.

Practical Information

- Project duration: 6-12 months

- Location: Radboud University Medical Center, Nijmegen

- For more information, please contact Daan Schouten